Full Publication List (chronological)

Visionary: The World Model Carrier Built on WebGPU-Powered Gaussian Splatting Platform

Yuning Gong, Yifei Liu, Yifan Zhan, Muyao Niu, Xueying Li, Yuanjun Liao, Jiaming Chen, Yuanyuan Gao, Jiaqi Chen, Minming Chen, Li Zhou, Yuning Zhang, Wei Wang, Xiaoqing Hou, Huaxi Huang, Shixiang Tang, Le Ma, Dingwen Zhang, Xue Yang, Junchi Yan, Yanchi Zhang, Yinqiang Zheng, Xiao Sun, Zhihang Zhong
arXiv, 2025
project page / paper / code
We build an open platform via WebGPU and ONNX Runtime, enabling real-time rendering of diverse Gaussian Splatting variants (3DGS, MLP-based 3DGS, 4DGS, Neural Avatars).

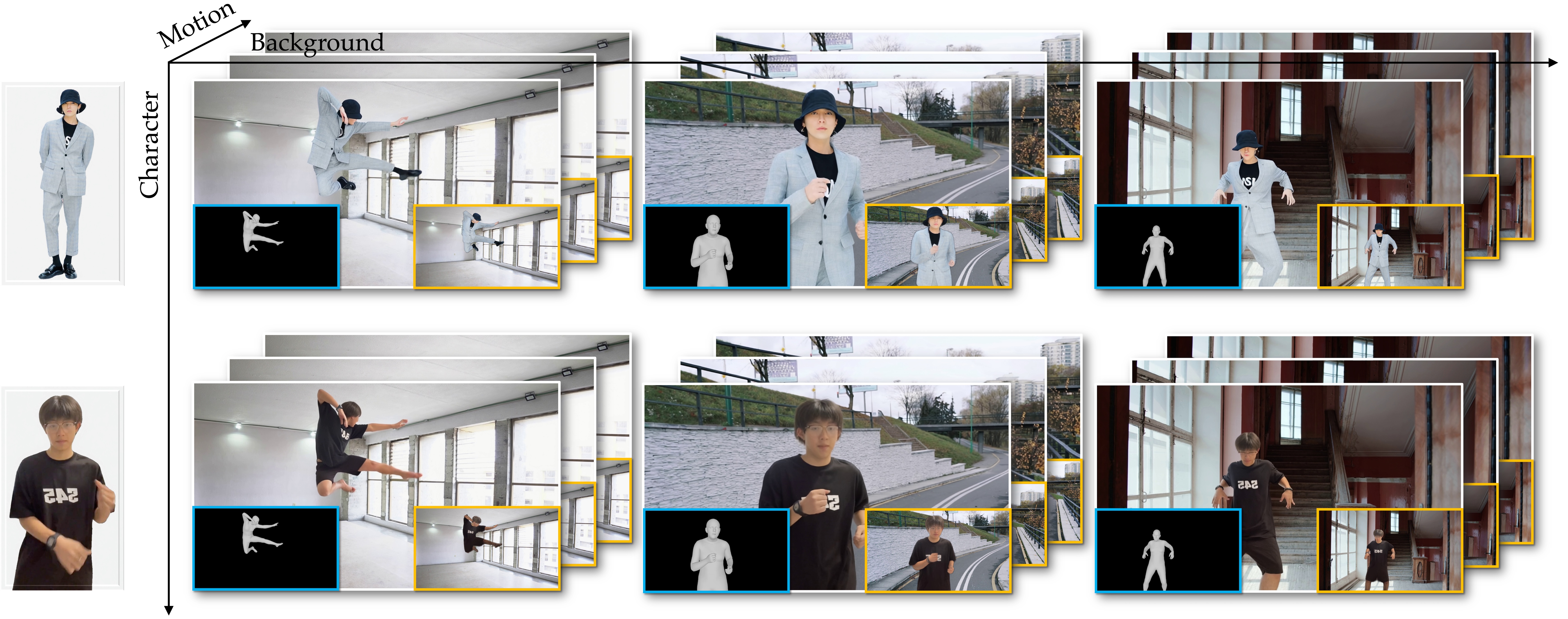

AniCrafter: Customizing Realistic Human-Centric Animation via Avatar-Background Conditioning in Video Diffusion Models

Muyao Niu, Mingdeng Cao, Yifan Zhan, Qingtian Zhu, Mingze Ma, Jiancheng Zhao, Yanhong Zeng, Zhihang Zhong, Xiao Sun, Yinqiang Zheng
arXiv, 2025
project page / paper / code
We leverage "3DGS Avatar + Background Video" as guidance for the video diffusion model to insert and animate anyone into any scene following a given motion sequence.

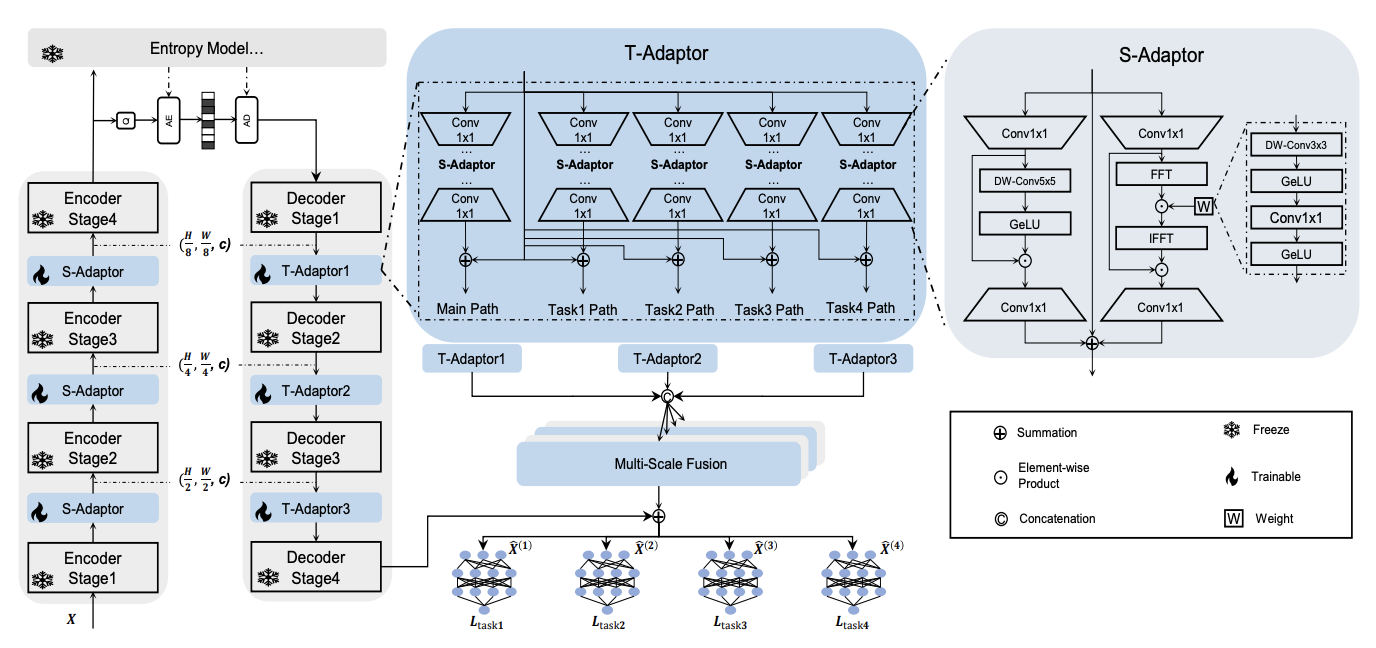

All-in-One Transferring Image Compression from Human Perception to Multi-Machine Perception

Jiancheng Zhao, Xiang Ji, Zhuoxiao Li, Zunian Wan, Weihang Ran, Mingze Ma, Muyao Niu, Yifan Zhan, Cheng-Ching Tseng, Yinqiang Zheng
arXiv, 2025
paper / code
An asymmetric adaptor framework bridges learned image compression and multi-task vision with a single compact model.

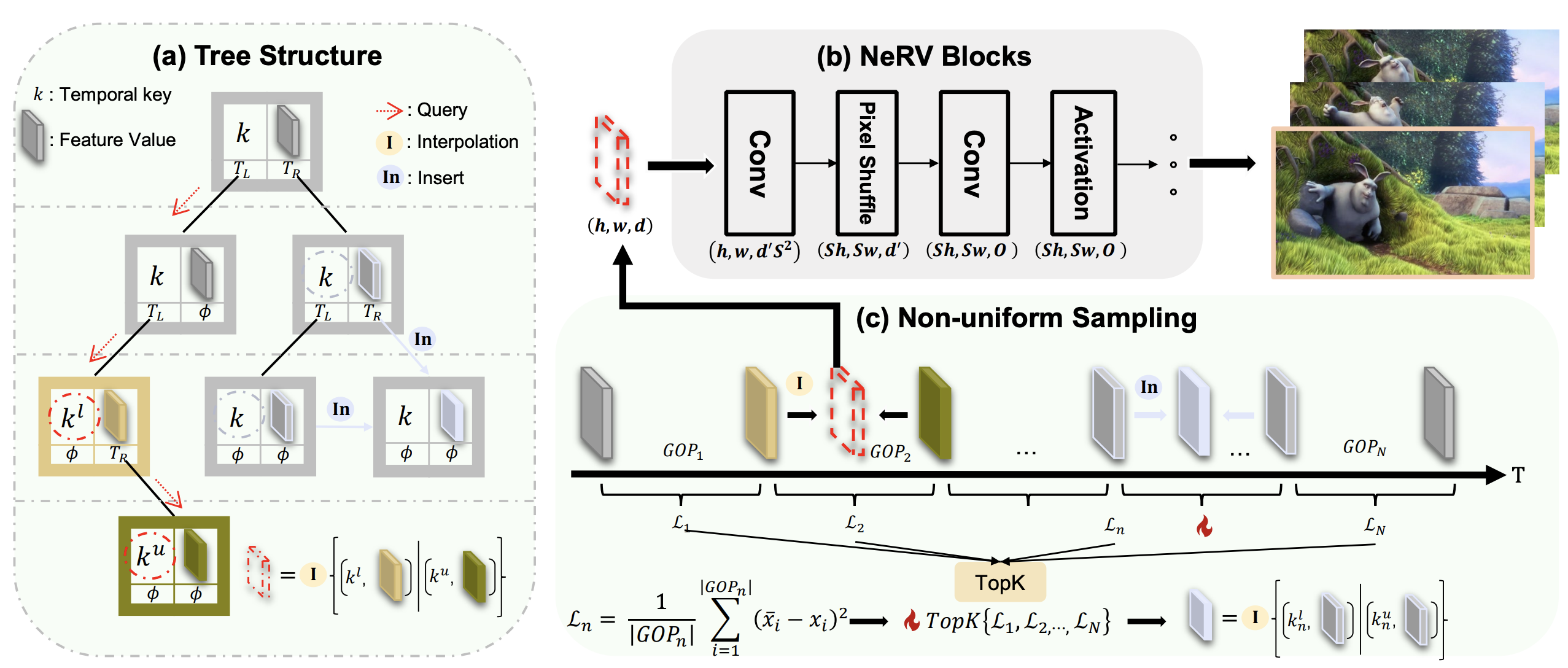

Tree-NeRV: A Tree-Structured Neural Representation for Efficient Non-Uniform Video Encoding

Jiancheng Zhao, Yifan Zhan, Qingtian Zhu, Mingze Ma, Muyao Niu, Zunian Wan, Xiang Ji, Yinqiang Zheng
ICCV, 2025
paper / code
We propose Tree-NeRV, a novel tree-structured feature representation for efficient and adaptive video encoding. Unlike conventional approaches, Tree-NeRV organizes feature representations within a Binary Search Tree (BST), enabling non-uniform sampling along the temporal axis.

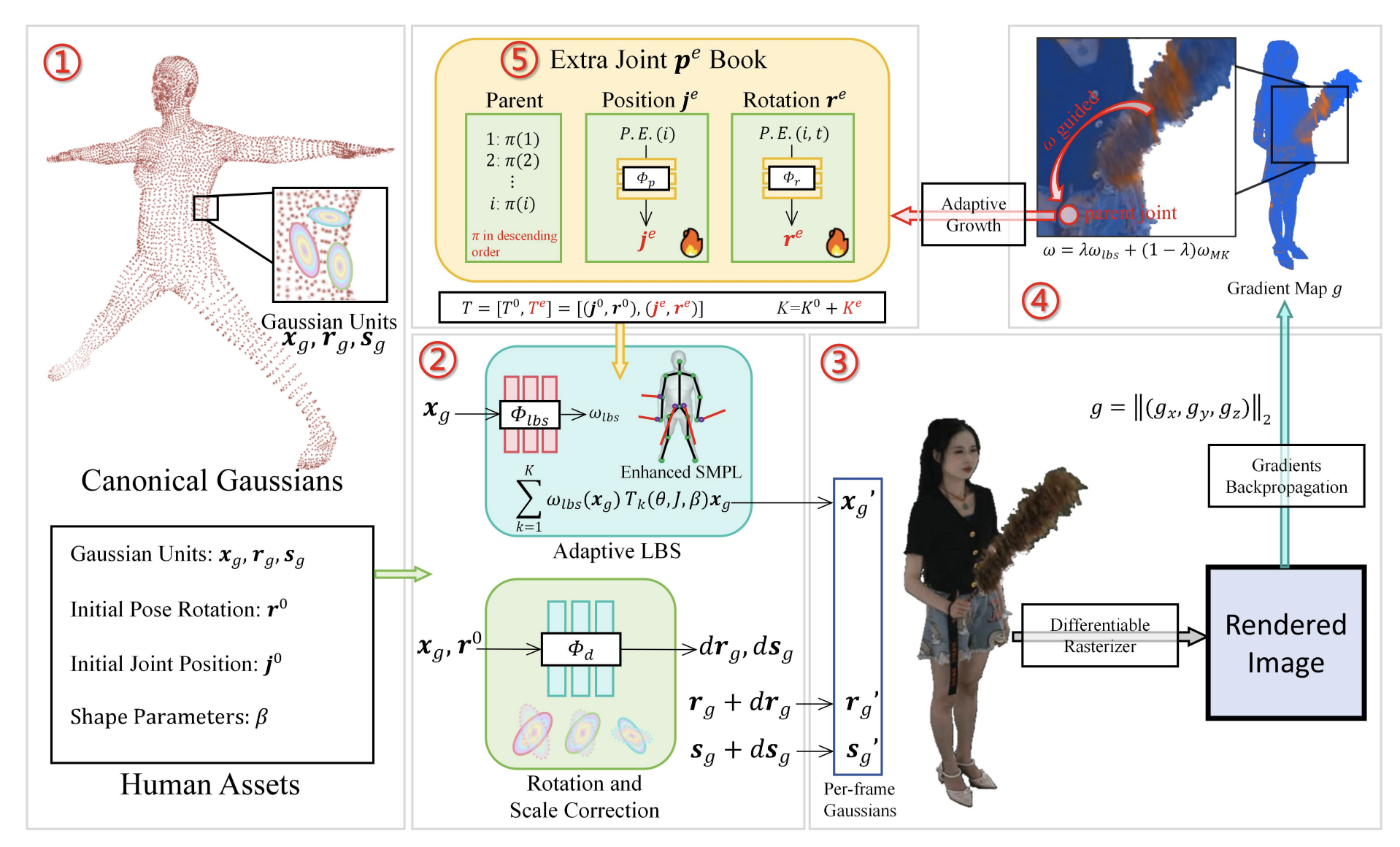

ToMiE: Towards Explicit Exoskeleton for the Reconstruction of Complicated 3D Human Avatars

Yifan Zhan, Qingtian Zhu, Muyao Niu, Mingze Ma, Jiancheng Zhao, Zhihang Zhong, Xiao Sun, Yu Qiao, Yinqiang Zheng
ICCV, 2025
paper / code
We highlight a critical yet often overlooked factor in most 3D human tasks, namely modeling complicated 3D humans with hand-held objects or loose-fitting clothing, which we address by modeling the exoskeleton of the human body.

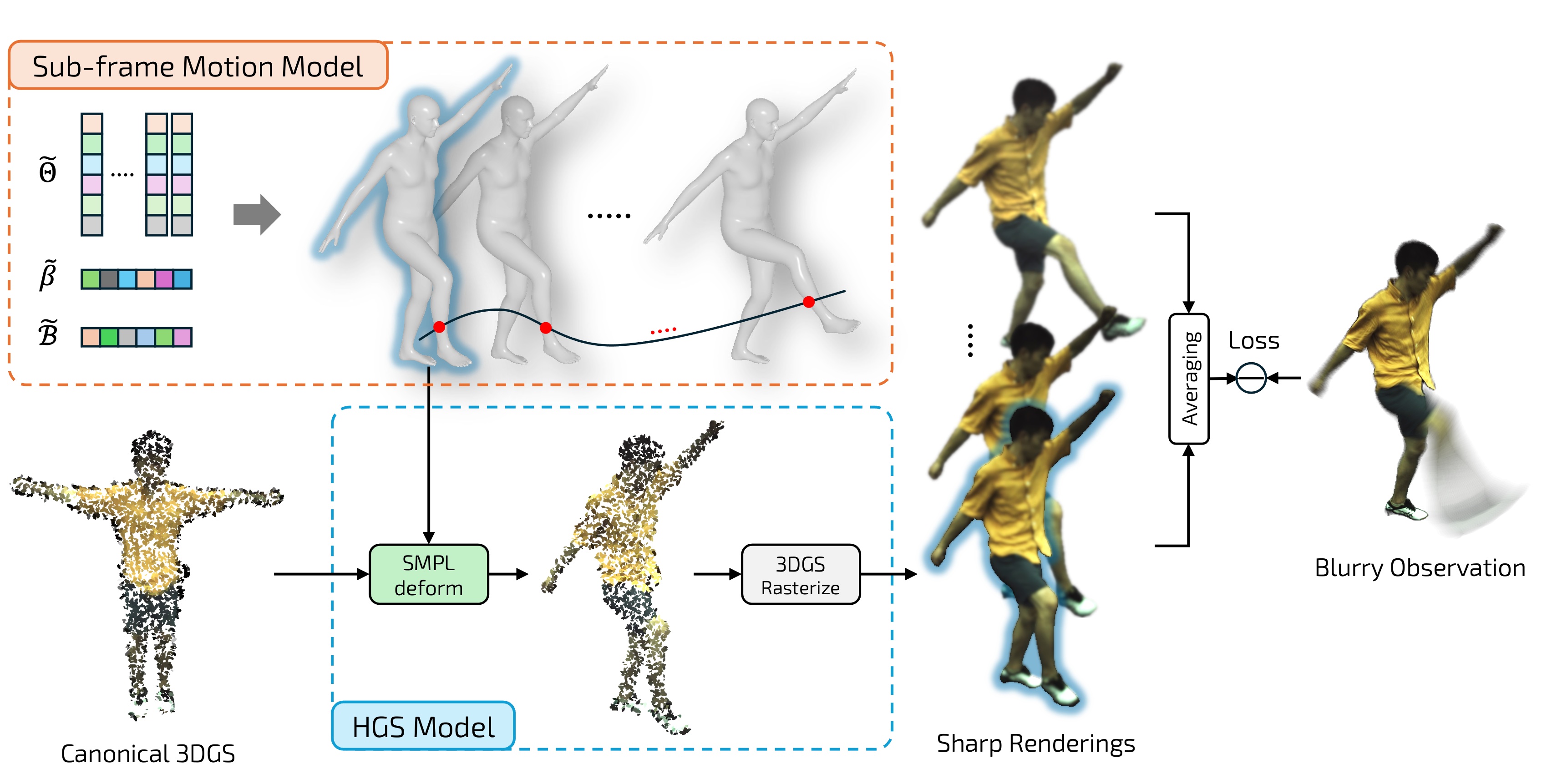

Bundle Adjusted Gaussian Avatars Deblurring

Muyao Niu, Yifan Zhan, Qingtian Zhu, Zhuoxiao Li, Wei Wang, Zhihang Zhong, Xiao Sun, Yinqiang Zheng
arXiv, 2024
paper / code
We introduce a method for deriving sharp intrinsic 3D human Gaussian avatars from blurry videos via a 3D human motion model and a physics-based blur formation model.

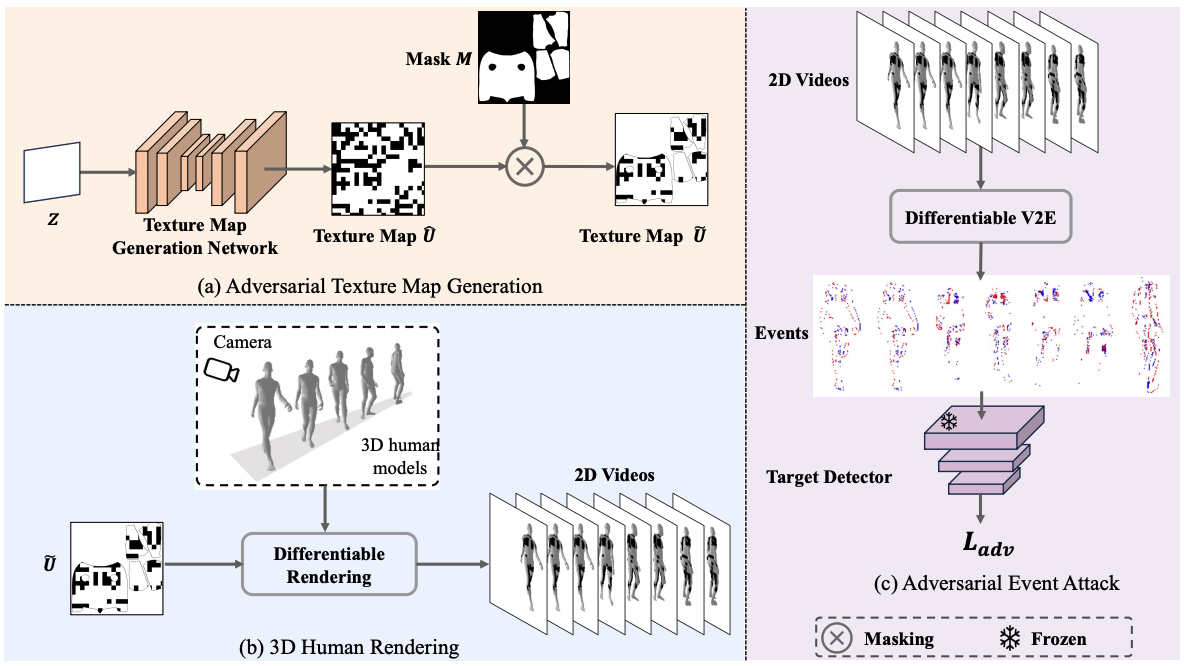

Adversarial Attacks on Event-Based Pedestrian Detectors: A Physical Approach

Guixu Lin, Muyao Niu, Qingtian Zhu, Zhengwei Yin, Zhuoxiao Li, Shengfeng He, Yinqiang Zheng
AAAI, 2025
paper page / code
We develop an end-to-end adversarial framework for event-driven pedestrian detection, framing the design of adversarial clothing textures as a 2D texture optimization problem.

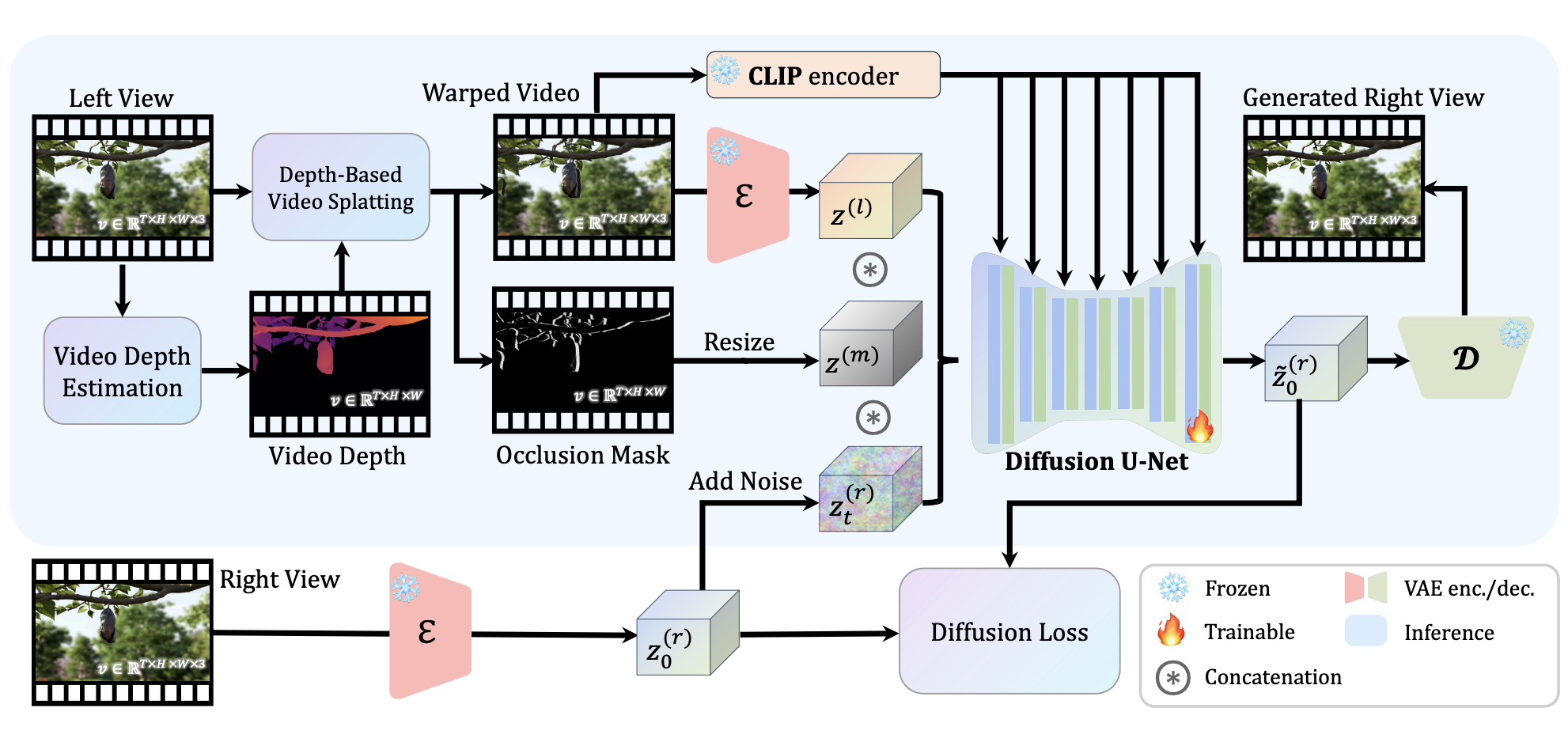

StereoCrafter: Diffusion-based Generation of Long and High-fidelity Stereoscopic 3D from Monocular Videos

Sijie Zhao*, Wenbo Hu*, Xiaodong Cun*, Yong Zhang#, Xiaoyu Li#, Zhe Kong, Xiangjun Gao, Muyao Niu, Ying Shan
arXiv (Technical Report), 2024
project page / paper
We present a novel framework for converting 2D videos to immersive stereoscopic 3D, addressing the growing demand for 3D content in immersive experiences. Leveraging foundation models as priors, our approach achieves the high-fidelity generation required by display devices.

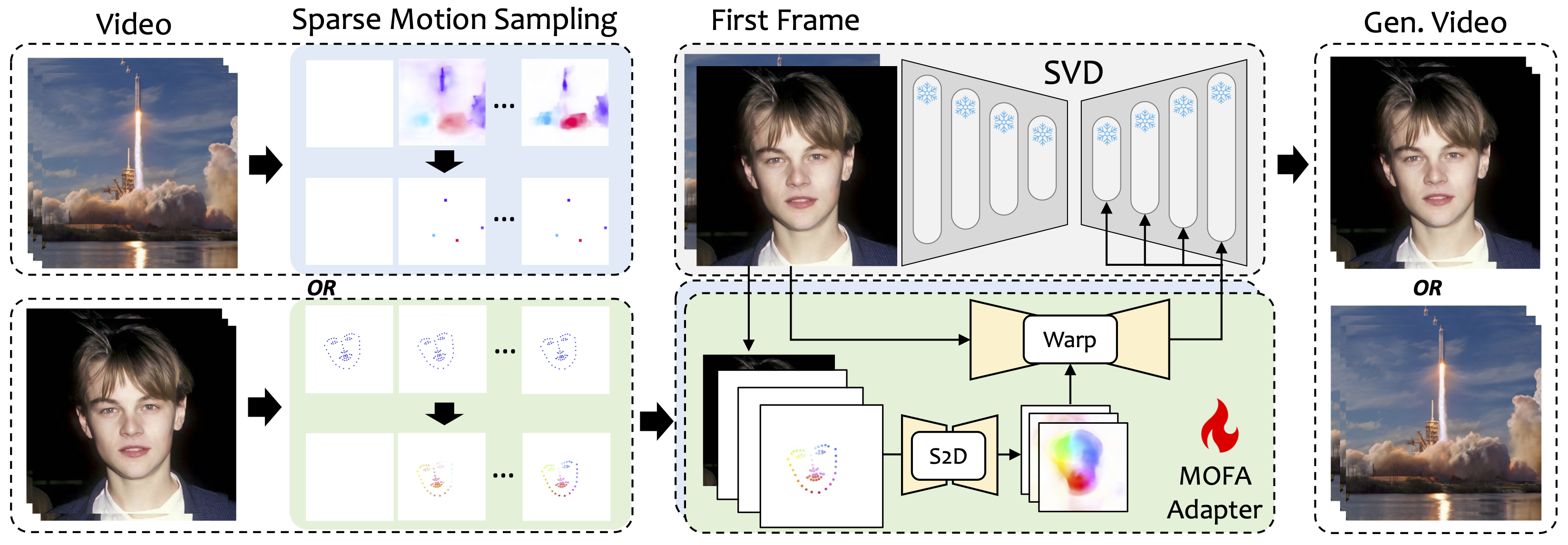

MOFA-Video: Controllable Image Animation via Generative Motion Field Adaptions in Frozen Image-to-Video Diffusion Model

Muyao Niu, Xiaodong Cun, Xintao Wang, Yong Zhang, Ying Shan, Yinqiang Zheng
ECCV, 2024
project page / paper / code
We introduce MOFA-Video to adapt motions from different domains to the frozen Video Diffusion Model. MOFA-Video can effectively animate a single image using various types of control signals, including trajectories, keypoint sequences, and their combinations.

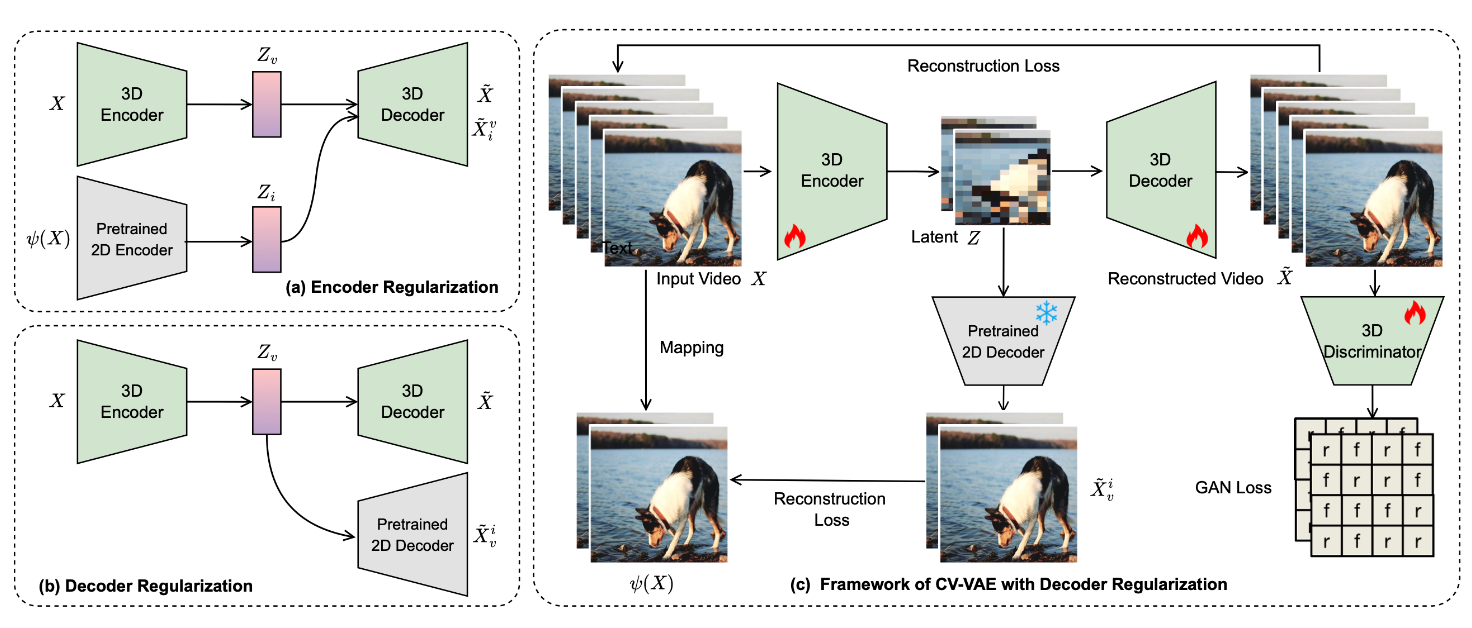

CV-VAE: A Compatible Video VAE for Latent Generative Video Models

Sijie Zhao, Yong Zhang, Xiaodong Cun, Shaoshu Yang, Muyao Niu, Xiaoyu Li, Wenbo Hu, Ying Shan
NeurIPS, 2024
project page / paper / code
We propose CV-VAE, a video VAE that is compatible with existing image and video models trained with the SD image VAE. Our video VAE provides a truly spatio-temporally compressed latent space for latent generative video models, as opposed to uniform frame sampling.

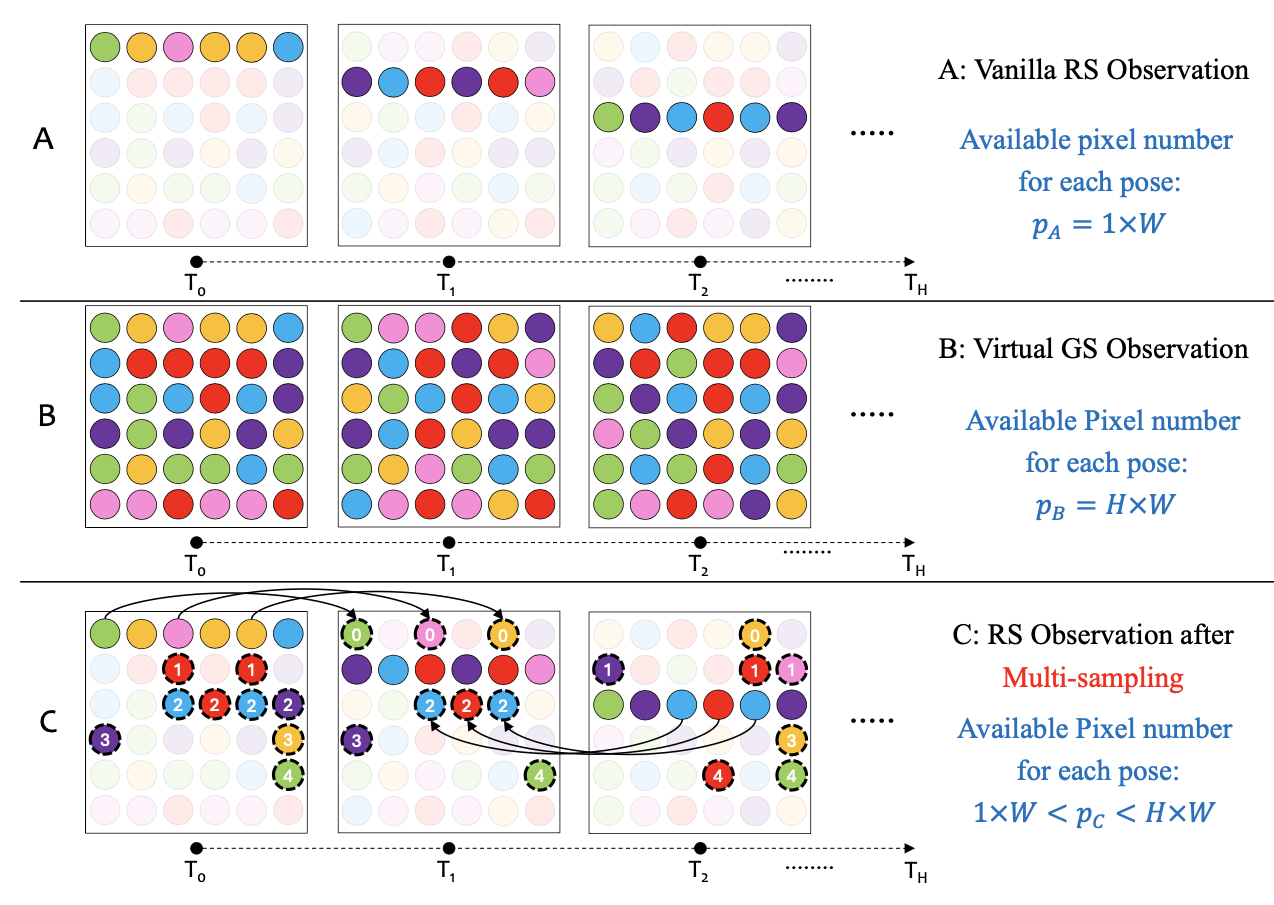

RS-NeRF: Neural Radiance Fields from Rolling Shutter Images

Muyao Niu, Tong Chen, Yifan Zhan, Zhuoxiao Li, Xiang Ji, Yinqiang Zheng
ECCV, 2024
paper / code
We extend NeRF to account for rolling shutter (RS) distortions with two techniques: camera trajectory smoothness regularization and a multi-sampling strategy.

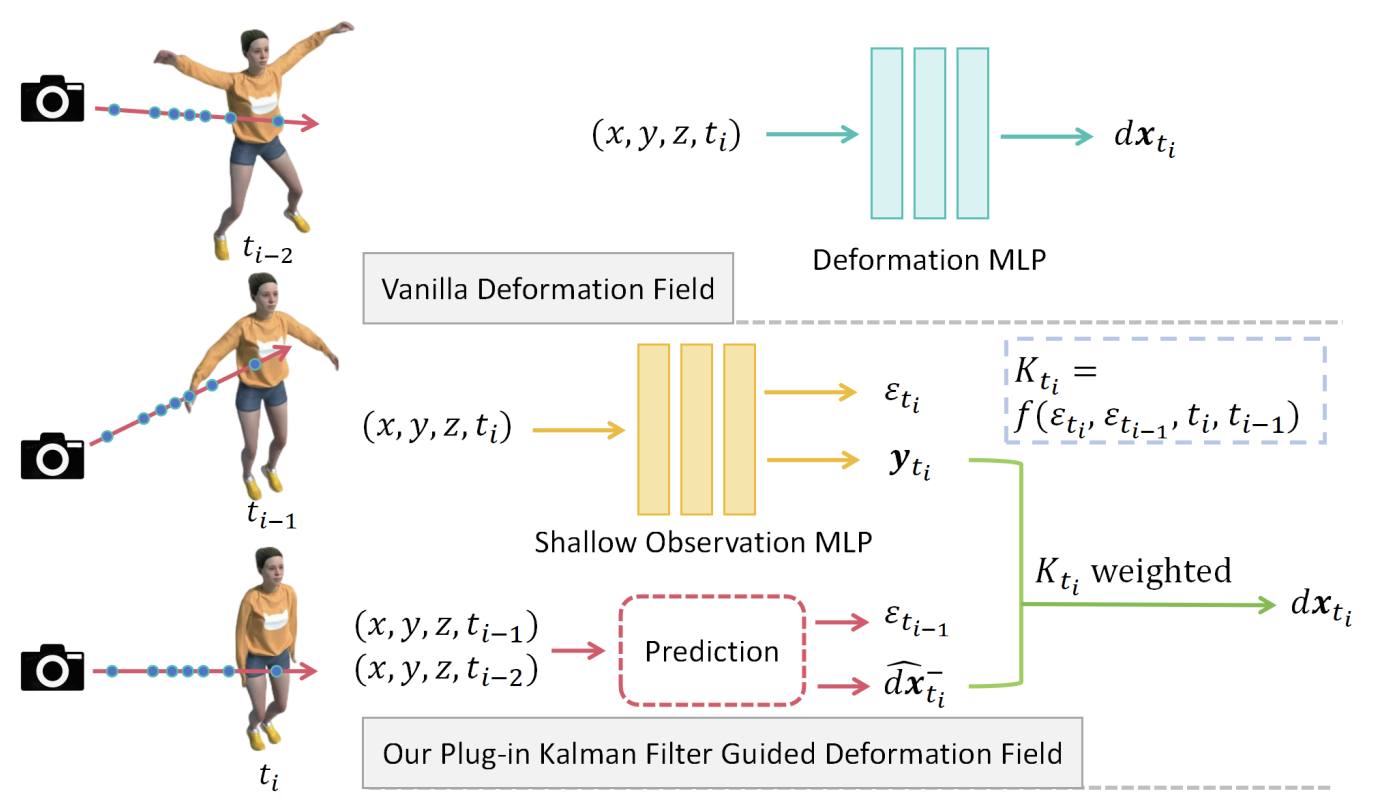

KFD-NeRF: Rethinking Dynamic NeRF with Kalman Filter

Yifan Zhan, Zhuoxiao Li, Muyao Niu, Zhihang Zhong, Shohei Nobuhara, Ko Nishino, Yinqiang Zheng
ECCV, 2024
paper / code
We combine a dynamic neural radiance field with a motion reconstruction framework based on Kalman filtering, enabling accurate deformation estimation from scene observations and predictions.

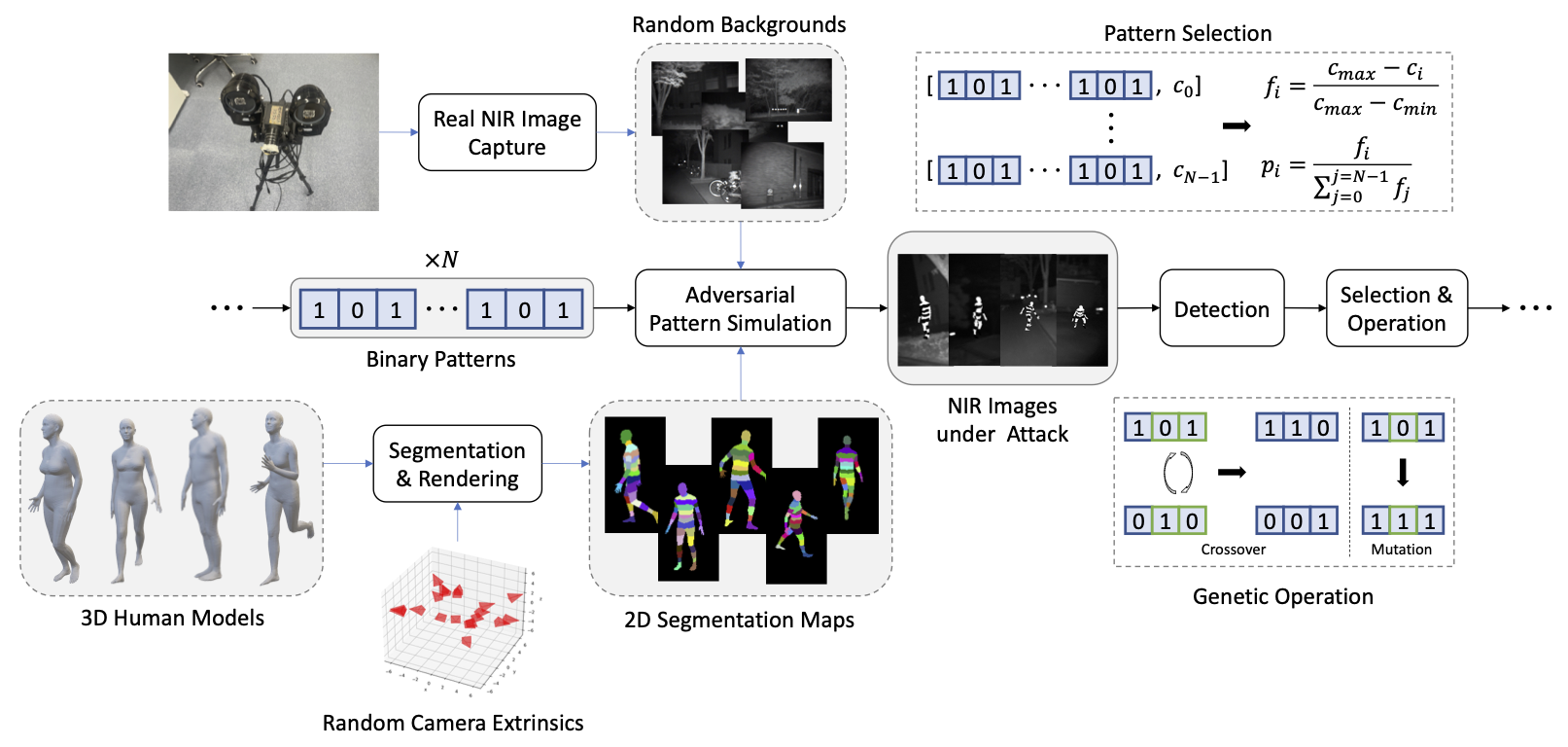

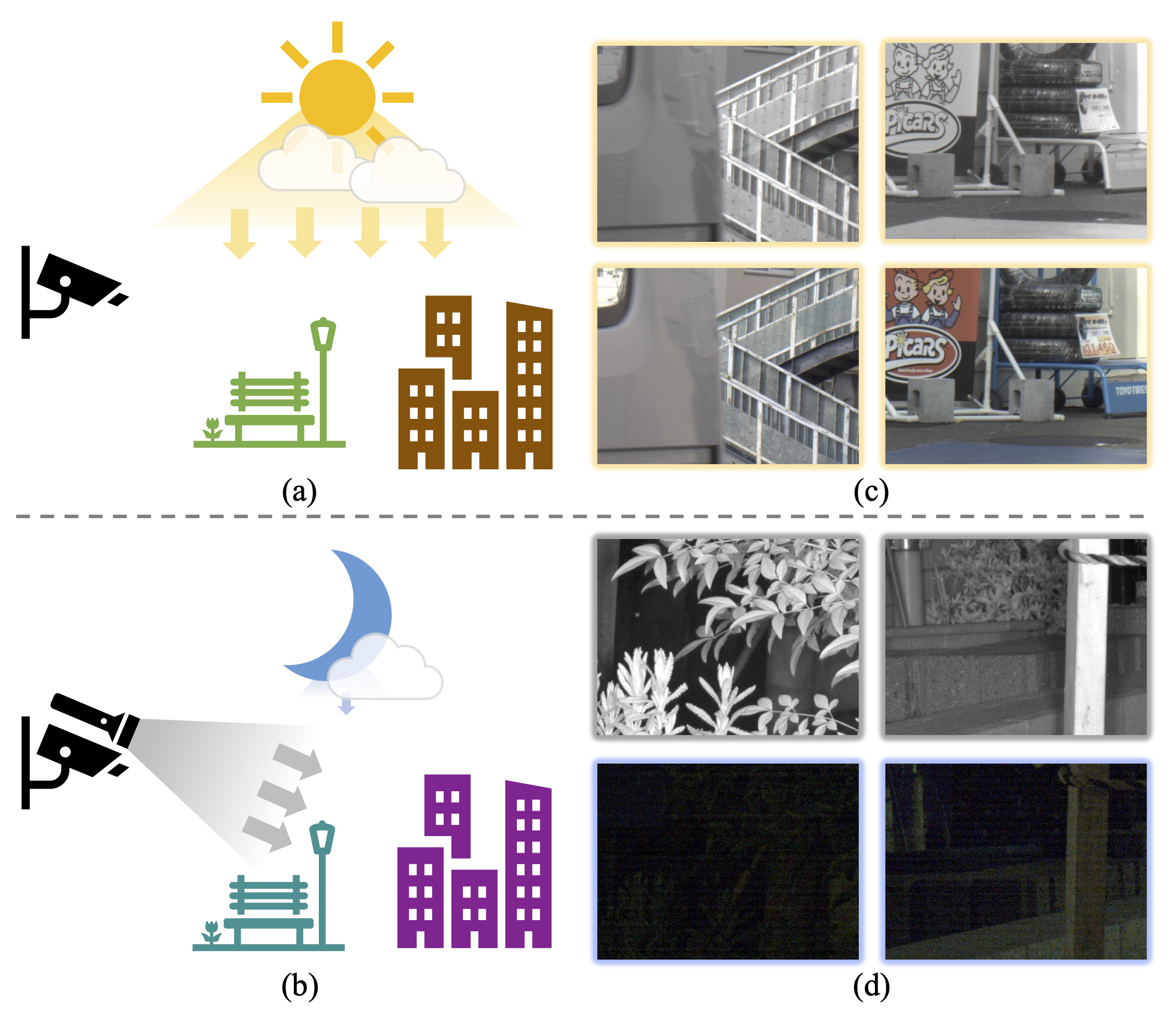

Physics-Based Adversarial Attack on Near-Infrared Human Detector for Nighttime Surveillance Camera Systems

Muyao Niu, Zhuoxiao Li, Yifan Zhan, Huy H. Nguyen, Isao Echizen, Yinqiang Zheng
ACM MM, 2023
paper / code
We introduce an approach that passively manipulates the intensity distribution of NIR images, and develop a 3D-aware, black-box attack algorithm to target deep learning-based NIR-powered human detection systems.

NIR-assisted Video Enhancement via Unpaired 24-hour Data

Muyao Niu, Zhihang Zhong, Yinqiang Zheng
ICCV, 2023
paper / code
We address the difficulty of collecting data for using NIR images to improve low-light VIS videos. Physics-inspired algorithms generate pseudo-paired NIR and VIS images that simulate day-to-night conditions. We then train an enhancement network on the generated pseudo data.

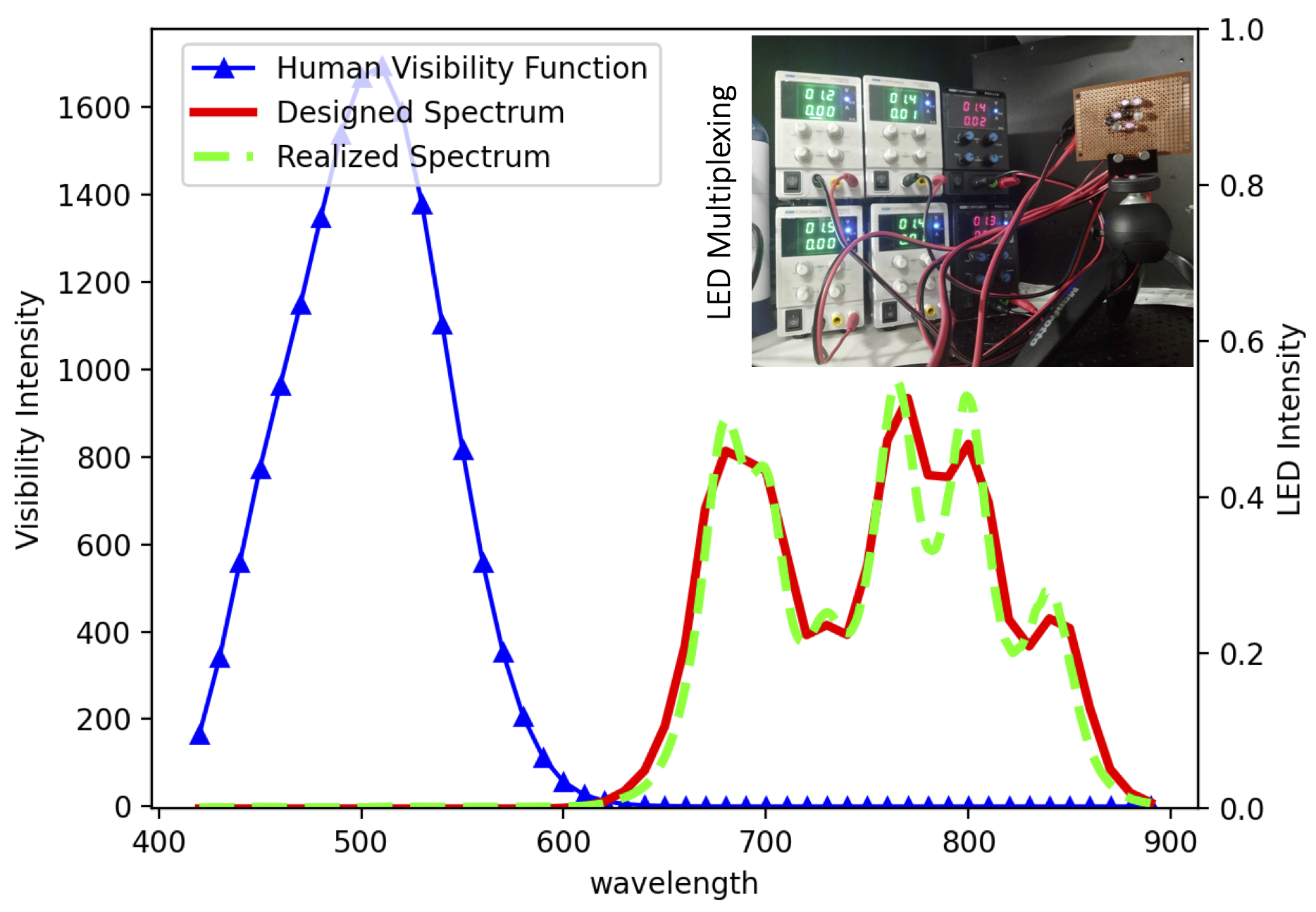

Visibility Constrained Wide-band Illumination Spectrum Design for Seeing-in-the-Dark

Muyao Niu, Zhuoxiao Li, Zhihang Zhong, Yinqiang Zheng
CVPR, 2023
paper / code
We designed an optimal illumination spectrum in the VIS-NIR range by considering human vision constraints, which significantly improves translation performance. A fully differentiable model was proposed, which includes the imaging process, human visual perception, and the enhancement network.

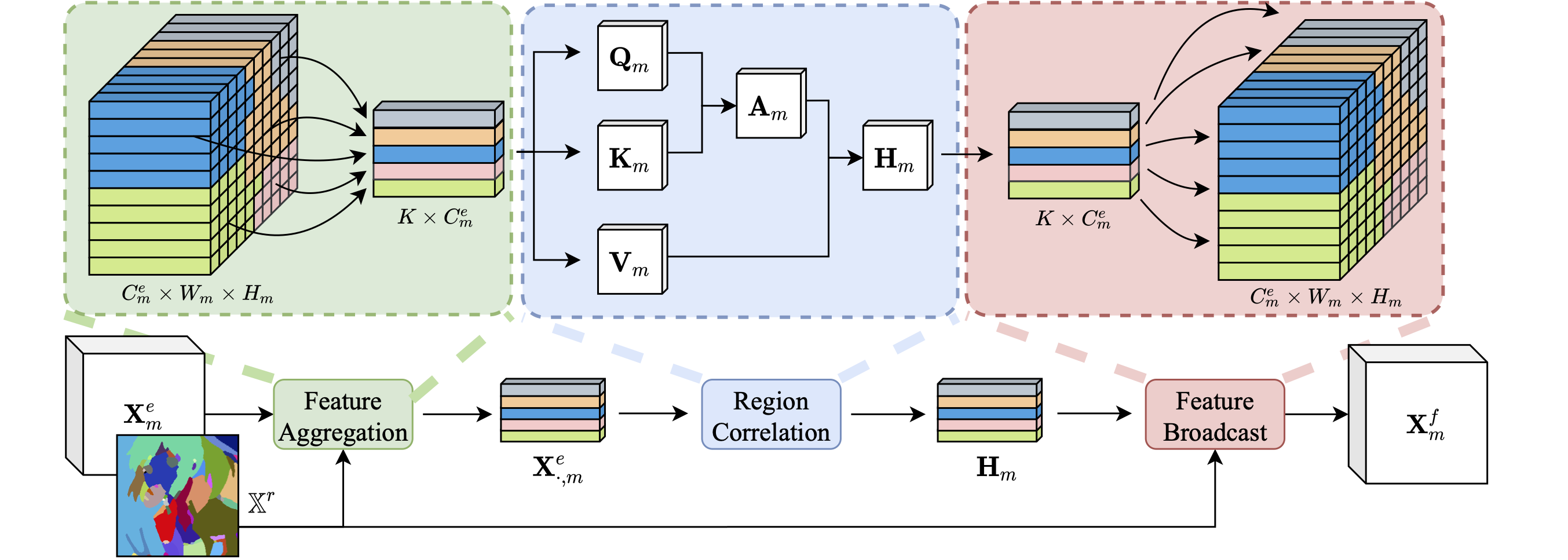

Region Assisted Sketch Colorization

Ning Wang*, Muyao Niu*, Zhihui Wang, Kun Hu, Bin Liu, Zhiyong Wang, Haojie Li
TIP, 2023
paper
We proposed the Region-Assisted Sketch Colorization (RASC) method, which uses a 'Region Map' to better utilize regional information within the sketch, enhancing the perception of region-wise features.

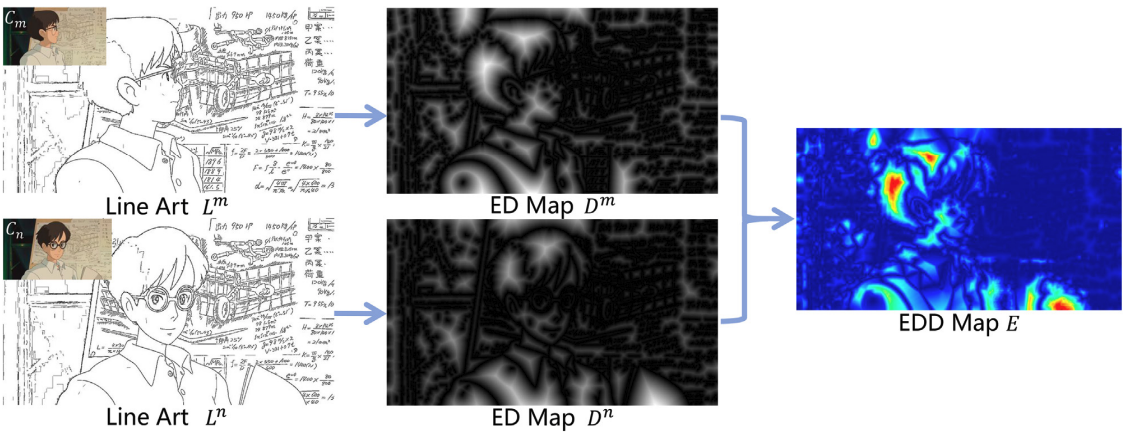

Coloring anime line art videos with transformation region enhancement network

Ning Wang*, Muyao Niu*, Zhi Dou, Zhihui Wang, Zhiyong Wang, Zhaoyan Ming, Bin Liu, Haojie Li
Pattern Recognition, 2023
paper
We propose a multi-scale Transformation Region Enhancement Network (TRE-Net) to enhance learning on geometric transformation regions.
